NC dreams of a future where the world is connected through joy. That future includes practicing safe, transparent, and unbiased technology ethics in pursuit of ‘righteous joy’. To this end, NC recognizes the importance of both AI technology development and ethical responsibility, and actively manages its technology. In particular, it has established the NC AI Ethics Framework so that AI technology can develop sustainably on the basis of ‘human values’.

The three core values of the NC AI Ethics Framework are ‘Data Privacy’, ‘Unbiased’, and ‘Transparency’. NC takes care to protect users’ data and to prevent social bias throughout AI technology development, and aims to develop ‘explainable AI’. To raise social awareness of AI ethics, it is also carrying out multiple projects, including discussions with world-renowned scholars, joint research with external parties, and research support. This article explains why NC selected ‘Unbiased’ as a core value and what it is doing to uphold that value.

An Unbiased AI

According to a report released by UNESCO in 2019, ‘gender bias’ that reinforces gender prejudice has appeared in various AI-based products. Research from the MIT Media Lab likewise found that facial recognition errors occurred 30 to 40 times more often for certain races or genders than for others. Bias takes many forms when AI is trained on data with diverse characteristics. When such bias risks causing unfair discrimination or prejudice against a particular class, group, or individual in society, the data must be handled with greater caution and sensitivity. NC is making continuous efforts to develop unbiased technology and apply it to its services by eliminating unethical expressions that cause discrimination, hatred, and prejudice from AI data and by introducing a system for developing fair AI technology.

A Glossary of Unethical Expressions

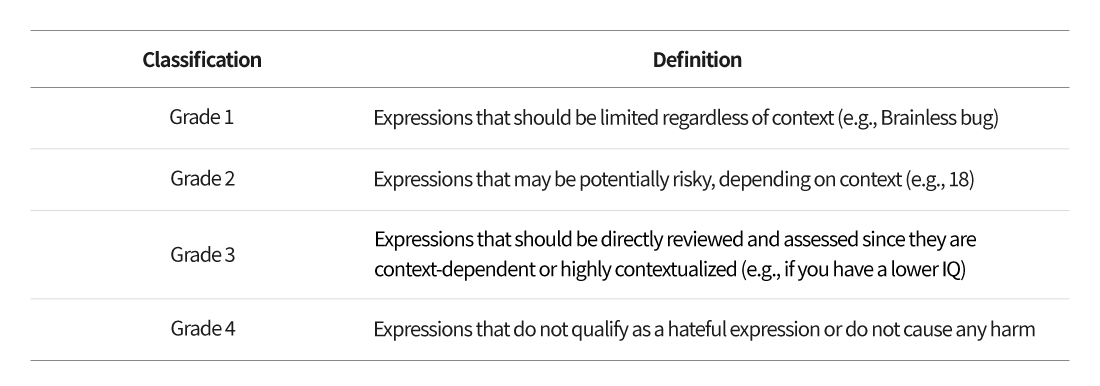

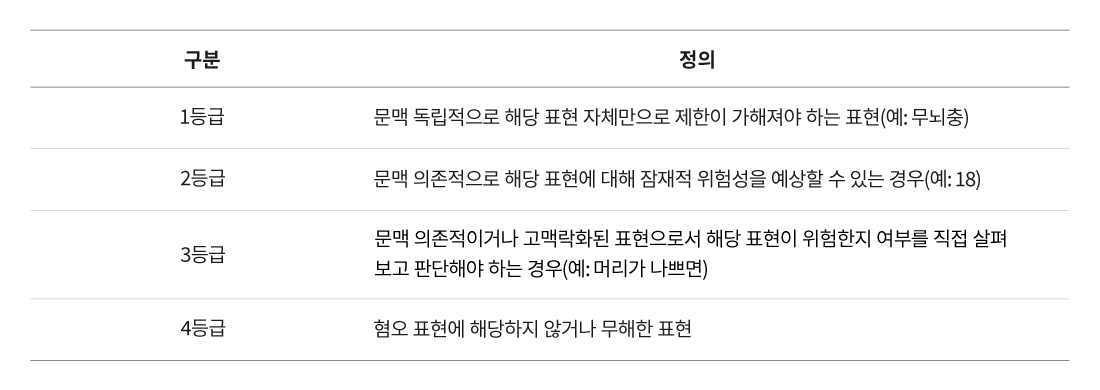

NC is building a ‘Glossary of Unethical Expressions’ to eliminate unethical expressions that may exist in data. The glossary collects expressions such as curses and sexual harassment and classifies them into groups under a classification system that reflects their type and severity. It serves as the filtering standard both when recognizing conversational data and when generating conversational output. Because conversational data can carry different meanings depending on the situation and its characteristics, NC has assigned grades to ‘unethical expressions’ and developed a response for each grade.
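The idea of a graded glossary with a response per grade can be sketched as a simple lookup. This is a minimal illustration only: the entries, category labels, grade numbers, response names, and the assumption that Grade 1 is the most severe are all hypothetical and do not come from NC's actual glossary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GlossaryEntry:
    term: str
    category: str  # hypothetical type label, e.g. "curse"
    grade: int     # hypothetical severity grade; 1 is treated as most severe here


# Placeholder entries; the real glossary contents are not given in the article.
GLOSSARY = {
    entry.term: entry
    for entry in [
        GlossaryEntry("badword_a", "curse", 1),
        GlossaryEntry("badword_b", "harassment", 2),
        GlossaryEntry("edgy_c", "strong_emotion", 3),
    ]
}

# Hypothetical response assigned to each grade.
RESPONSES = {1: "block", 2: "mask", 3: "review"}


def find_entries(text: str) -> list[GlossaryEntry]:
    """Return glossary entries for every whitespace-separated token in the glossary."""
    return [GLOSSARY[tok] for tok in text.lower().split() if tok in GLOSSARY]


def decide(text: str) -> str:
    """Pick the response for the most severe grade found, or 'allow' if none."""
    hits = find_entries(text)
    if not hits:
        return "allow"
    most_severe = min(hit.grade for hit in hits)
    return RESPONSES[most_severe]
```

A service that tolerates stronger language could map its lower-severity grades to "allow" instead, which is one way to reflect differing service policies.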

Some expressions would be considered unethical by the general public, while others may be considered unethical only depending on the context or the audience. The chat service provided by PAIGE allows strong emotional expressions to a certain extent, whereas some services do not allow terms that convey strong emotion at all. Conversational technology is therefore the area that most needs extensive application of technology for preventing unethical expressions: terms that are immediately recognizable as unethical are easy to find and respond to, but expressions that depend on context require technological support to judge them in light of the overall conversation.

NC’s NLP Center has collected unethical expressions since the PAIGE service began. Since early 2021, when it set the goal of filtering hateful expressions, it has collected and classified them in earnest. NLP (Natural Language Processing) consists of NLU (Natural Language Understanding), which concerns recognition, and NLG (Natural Language Generation), which concerns utterance. To determine what to process, hateful expressions contained in users’ statements or data must first be detected using NLU technology. Only by collecting as many hateful expressions as possible and training on them can an AI understand that a curse in a user’s sentence is not just a word, but a curse. Researchers can then use NLG technology to develop conversational models that serve various conversational purposes. The goal of the NLP Center is to develop technologies that create conversations that bring joy to users and, ultimately, to keep the final generated statements free of unethical expressions.

Grading and Filtering Unethical Expressions

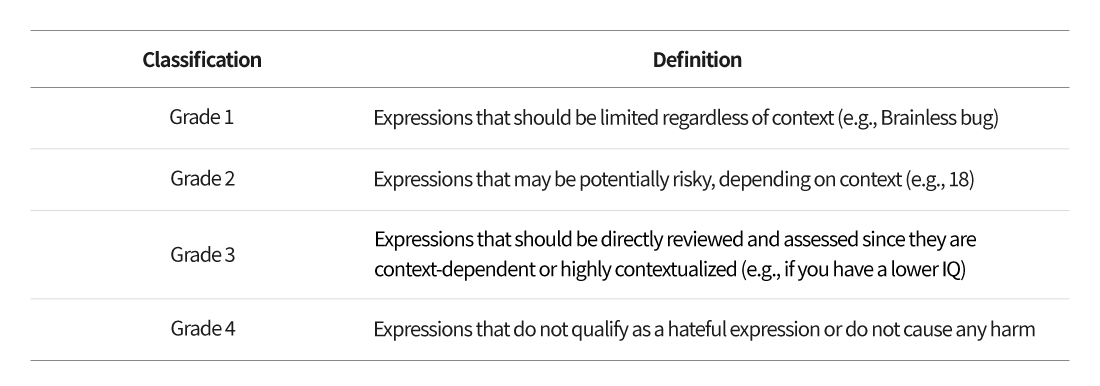

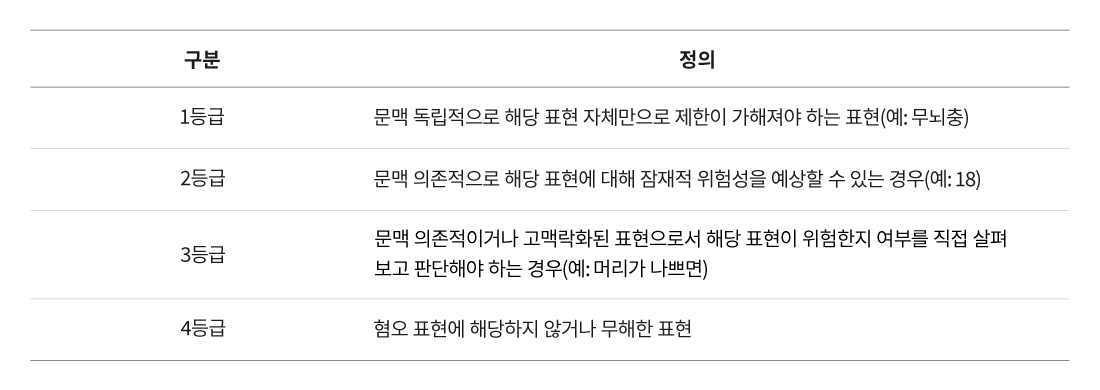

NC has developed responses by dividing unethical expressions into four grades. The classification standards are as follows:

NC plans to develop and apply different technologies to filter unethical expressions at each grade. Technologies under development include pattern-matching filtering that focuses on terminology, filtering that evaluates statements similar to the expressions in the Glossary of Unethical Expressions, and context-based filtering that identifies conversations considered unethical as a whole. NC has applied the technology for filtering Grade 1 terminology to PAIGE 4.0 and plans to apply the technology for filtering Grade 2 terminology to PAIGE2022. At the moment, NC is carrying out pre-service tests.
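The first two filtering approaches described above can be illustrated roughly as follows. This sketch is not NC's implementation: the glossary terms are placeholders, the similarity measure (`difflib.SequenceMatcher` from the Python standard library) and the 0.8 threshold are assumptions chosen for illustration, and context-based filtering is left out because it would require a trained model.

```python
import re
from difflib import SequenceMatcher

# Placeholder terms standing in for glossary entries (hypothetical).
GLOSSARY_TERMS = ["badword", "slurterm"]

# Pattern-matching filter: exact, case-insensitive, whole-word matches.
PATTERN = re.compile(
    r"\b(" + "|".join(map(re.escape, GLOSSARY_TERMS)) + r")\b",
    re.IGNORECASE,
)


def pattern_match(text: str) -> list[str]:
    """Return glossary terms that appear verbatim in the text."""
    return [m.group(0).lower() for m in PATTERN.finditer(text)]


def similar_terms(text: str, threshold: float = 0.8) -> list[tuple[str, str]]:
    """Return (token, glossary term) pairs that are close but not identical.

    Similarity is a character-level ratio; the threshold is an assumed value.
    """
    hits = []
    for token in re.findall(r"\w+", text.lower()):
        for term in GLOSSARY_TERMS:
            if token != term and SequenceMatcher(None, token, term).ratio() >= threshold:
                hits.append((token, term))
    return hits
```

Exact matching catches terms as they appear in the glossary, while the similarity pass is meant to catch deliberate misspellings such as a doubled letter. A character-level ratio will produce false positives on short words, so a real system would need tuning and review.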

As part of the filtering process, NC also separately manages alternatives to expressions that disparage particular races and nations and could cause political issues in games where machine translation is provided, so that such expressions are rendered as neutrally as possible. NC strives to recognize international issues such as the war in Ukraine promptly and reflect them in its services. Although the Glossary of Unethical Expressions itself is not used there, the technology for filtering forbidden words in the glossaries managed by game operation teams will be applied more widely to other games.

A System to Prevent Creation of Unethical Statements

Beyond preventing the system from making discriminatory statements such as curses, NC has broadened the concept of unethical statements and established a system to prevent their generation, so that users do not find the system’s statements impolite. Specifically, it classifies statements generated by AI into three categories: ‘Biased’, ‘Impoliteness’, and ‘Politeness’. On that basis, the system pursues ‘Politeness’ rather than ‘Impoliteness’ even when a statement is unbiased, so that its conversations show respect and care for human beings.

Fundamentally, the statements an AI generates depend on its training data. For an AI to understand that some expressions contain prejudice, data containing prejudice must be included in its training data in an unbiased manner. Learning from such data falls within NLU research, while designing models that generate appropriate conversations falls within NLG research. A conversation with an AI may look like one-to-one communication, but it is also a one-to-many conversation, which is why AI prejudice must be approached with caution. Once AI reaches the stage where it uses context as freely as human beings do, people will become more receptive to AI statements that reflect prejudice.

Research aimed at the ‘Unbiased’ stage was based on a passive defense strategy. In unethical conversations, some people attach great meaning to a word and react to it, while others do not find the same word serious. Because sensitivity varies between individuals depending on their thoughts and experiences, regulating only biased statements would lead to the conclusion that we bear no responsibility for many things, including hatred that arises from context. In many cases, however, the conversations that cause disputes between users are precisely the context-dependent ones. NC has therefore set the goal of designing conversations that users would not find impolite, in addition to conversations that would not be considered biased.

AI is still learning human language, so its statements cannot yet be assumed to be polite. To ensure that AI interacts appropriately with human beings, NC is studying technology and making various attempts to control impolite statements. It is studying ways for AI to suggest a different topic, to decline to continue a conversation, and to use conversation strategies appropriately when users express prejudice. In other words, the AI would not respond to people’s expressions with biased statements, and would remain polite by avoiding impolite behavior such as disrespectful statements or failing to answer people’s questions. ‘Politeness’ is a goal of conversational research.
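One way to picture the combination of the three-way classification and the topic-change strategy is a gate over candidate replies. The sketch below is an assumption-laden illustration: `choose_reply`, `toy_classifier`, the fallback sentence, and the keyword rules are all hypothetical, and a real classifier would be a trained model rather than keyword checks.

```python
from enum import Enum
from typing import Callable, Iterable


class Label(Enum):
    BIASED = "Biased"
    IMPOLITENESS = "Impoliteness"
    POLITENESS = "Politeness"


# Hypothetical fallback that steers the conversation to another topic.
FALLBACK = "Shall we talk about something else?"


def choose_reply(candidates: Iterable[str], classify: Callable[[str], Label]) -> str:
    """Return the first candidate labeled Politeness, else the fallback."""
    for candidate in candidates:
        if classify(candidate) is Label.POLITENESS:
            return candidate
    return FALLBACK


def toy_classifier(text: str) -> Label:
    """Stand-in for a trained model; the keyword rules are purely illustrative."""
    lowered = text.lower()
    if "those people" in lowered:
        return Label.BIASED
    if "shut up" in lowered:
        return Label.IMPOLITENESS
    return Label.POLITENESS
```

For example, `choose_reply(["Shut up.", "Happy to help!"], toy_classifier)` skips the impolite candidate and returns the polite one, and falls back to the topic change when no candidate passes.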

Testing of AI’s Impact on Fairness and Bias

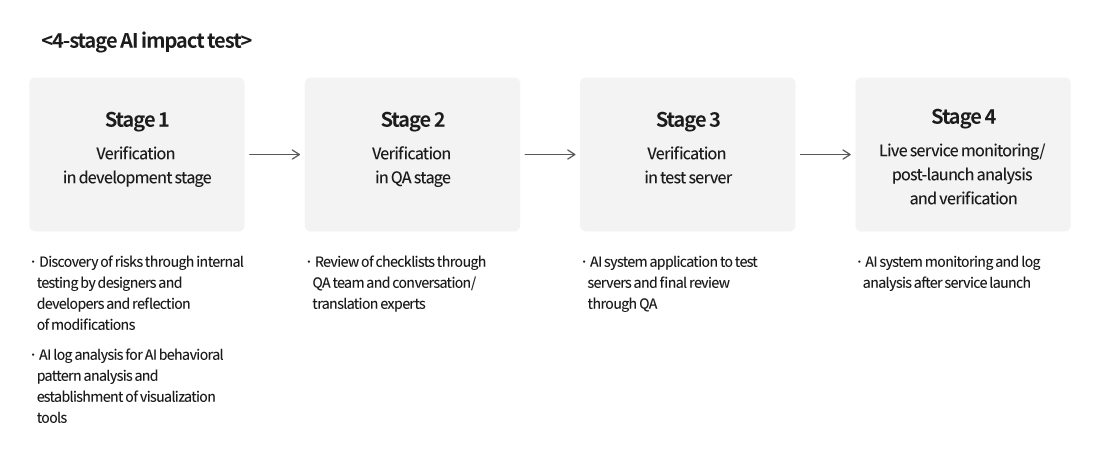

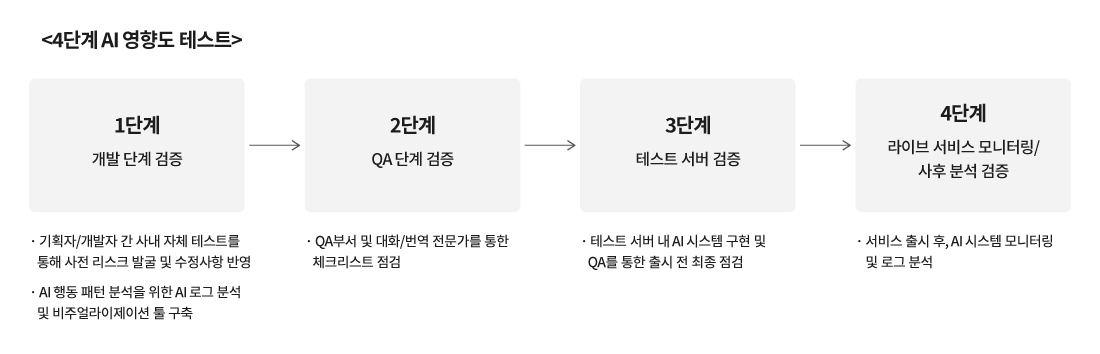

NC is developing a fair and unbiased AI system to give customers a positive playing experience. To this end, it develops various test scenarios and applies a 4-stage AI impact test whenever it develops an AI service. During the development phase, personal information and unethical expressions are filtered out based on patterns. Uniform rules are difficult to apply, however, because the same words and numbers may or may not be read as a curse depending on the context. Since no single check can handle every case perfectly, NC verifies its systems repeatedly by conducting AI impact tests at every development stage. The 4-stage test examines AI impact across the processes before and after technology development and service launch, including the data generation stage, the module test stage, and the service stage, and addresses unexpected problems.
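Pattern-based filtering of personal information during development might look like the sketch below. The regular expressions, the phone-number format, and the `[EMAIL]` and `[PHONE]` placeholders are assumptions made for illustration; the article does not describe the actual patterns NC uses.

```python
import re

# Assumed patterns: a simple email shape and a hyphenated phone-number shape.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\b\d{2,3}-\d{3,4}-\d{4}\b")


def mask_personal_info(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)
```

Rules like these are purely surface-level: they cannot tell whether a number in a chat message is harmless or is being used as an insult, which is the limitation that motivates repeating verification at each stage.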

For Digital Human Technology That Goes Beyond Overcoming Discrimination to Achieve Politeness

NC is currently testing the system for preventing the generation of unethical statements before applying it to services, examining ‘to what extent it can prevent unethical statements and which unethical statements it cannot prevent’. In the short term, NC’s NLP Center aims to complete the tests and apply the system to services. Its long-term goal is to develop in-house a systematic quality assurance method suited to Korean-language chat, along with high-quality technology for filtering unethical expressions, since there is not yet enough research in Korea on testing unethical expressions. NC continues to collect alternatives for political expressions used in machine translation and to delete and mask advertisements and curses in game chats. It also aims to apply its spam (prohibited) word system to all IPs so that users can enjoy games in an even cleaner environment. All of these efforts will contribute to creating ‘righteous joy’ for users. NC will also continue its research with a view to digital human technology, because to truly reach the stage of conversation, an AI must not only refrain from biased statements but also be perceived as polite by users.

NC makes these efforts because, for AI technology to be regarded as an ethical participant in society, it must not cause discrimination, and AI-based services must not harm or isolate particular individuals. AI researchers and developers should also keep asking themselves ‘what is considered unethical’. These efforts will lay the foundation for helping people and society remain unbiased and share a sense of fairness.
