NC has been investing consistently in AI technology for over 10 years and has produced strong research results across a wide range of AI fields. Its technology has now matured to the point where it can generate real synergy when combined with creative industries, including games.

Previous episodes of the [TECH Standard] series highlighted NC’s technological prowess in the gaming industry. In this episode, we look at NC’s future, which goes beyond technological capability to creating new value, and share NC’s insights on the direction of gaming technology and its vision.

R&D: The Value of Exploration

If the future were predetermined, an R&D organization might not be necessary. In reality, however, unexpected events often occur, so it is essential to have an organization that explores the future in advance and sets a direction. This is precisely the role of NC’s R&D organization, which is now looking further ahead and exploring uncertainty more actively than ever before.

NC’s R&D organization has more than a decade of history, and its results have been applied directly to NC’s games. For instance, <Lineage W> is the world’s first MMORPG to be served as a “global one-build,” a single game build shared by players worldwide. AI translation technology played a crucial role in this milestone: it automatically translates in-game chat, breaking down language barriers between players around the world and fostering a genuine sense of connection within the game. Thanks to continuous investment in R&D, NC has repeatedly set new standards not previously seen in games.

NC's next objective is to create digital humans that can interact in real time and enrich human creativity and enjoyment. To accomplish this, NC has already established a research organization equipped with all the technical components needed for digital human development, including AI and NLP, and is now ready to move forward.

Introducing NC’s Digital Human for the First Time: Digital TJ in Project M

Every year in March, the Game Developers Conference (GDC) is held in San Francisco, where Epic Games presents games being developed with Unreal Engine. This year, NC was invited to take part. NC has been developing games with the latest version of Unreal Engine 5, which had not yet been released to the public, so the invitation gave NC an excellent opportunity to showcase its cutting-edge technology to a global audience.

At GDC, NC aimed to unveil both its digital human technology and its upcoming game, Project M. After careful consideration, NC created a scenario in which digital TJ enters the world of Project M, showcasing both at once.

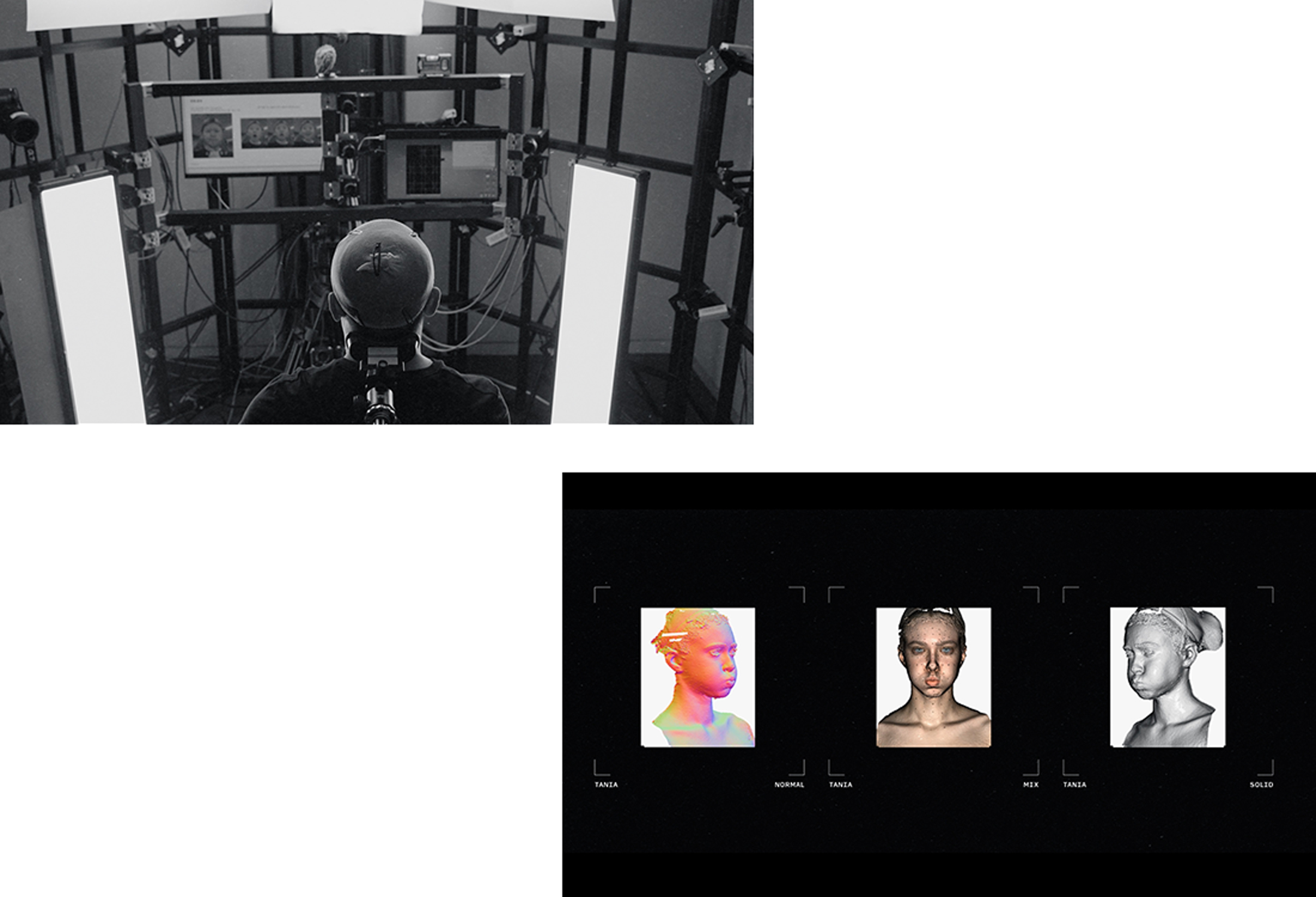

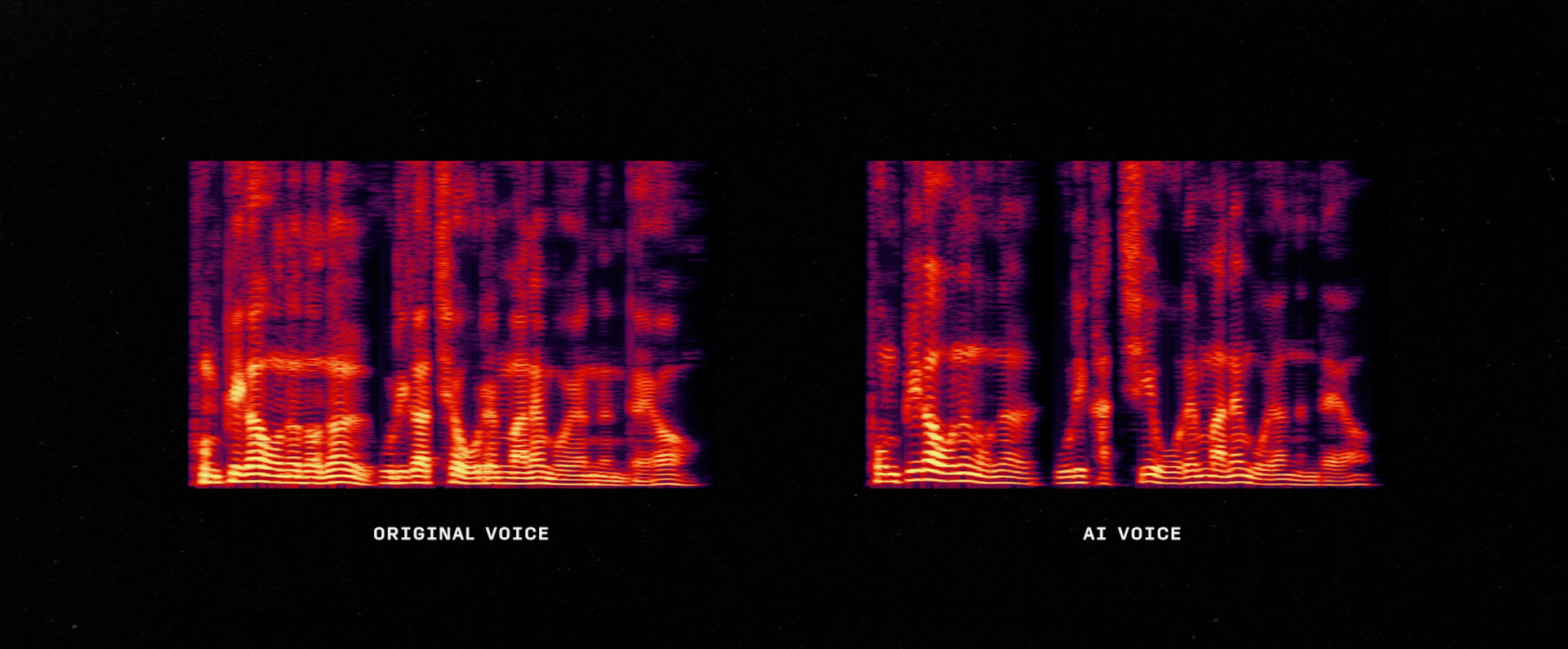

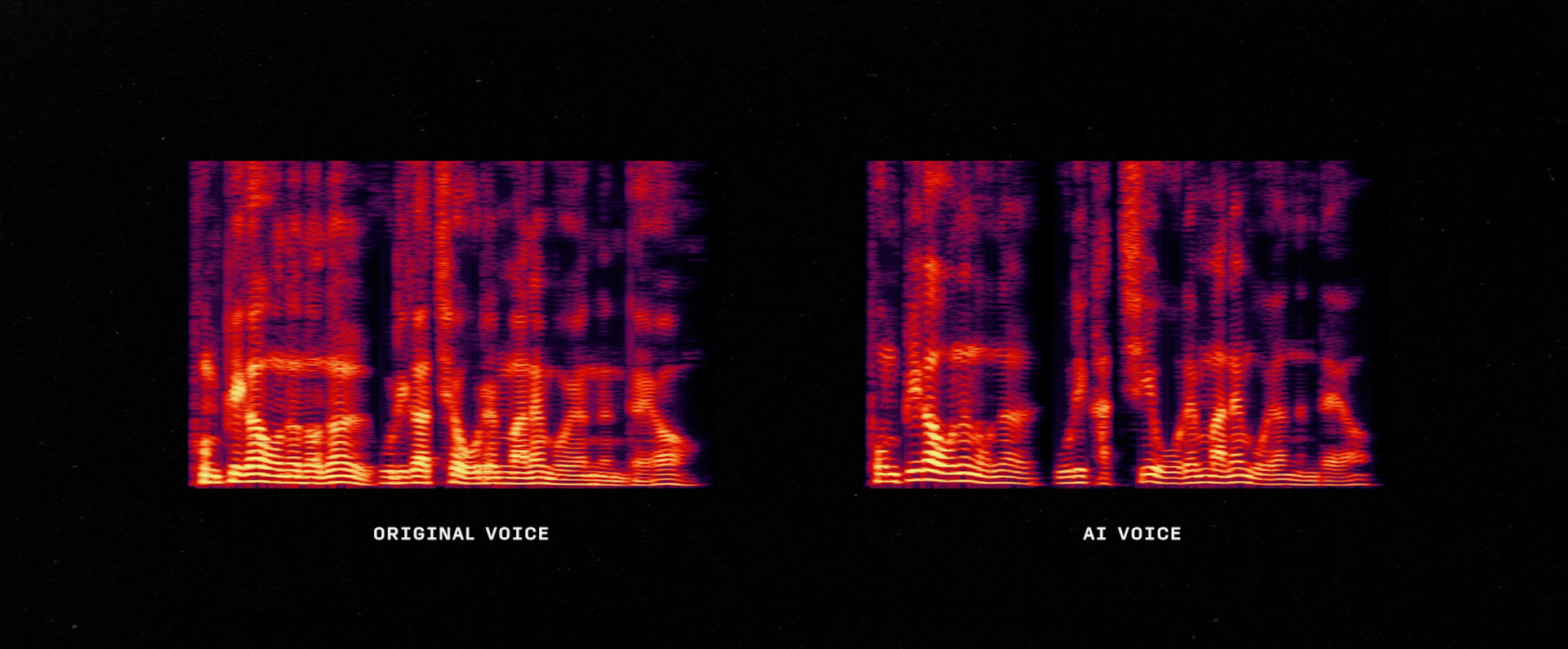

The digital TJ character was not only realistically replicated but also remodeled to fit the game. To achieve this, NC used a variety of AI technologies, including AI Voice and AI Voice-to-Face, along with advanced hardware for 3D scanning and 4D capture.

By drawing on the full range of technologies it has been developing, NC was able to capture realistic human facial expressions and then modify them. This accomplishment is a direct result of NC's technological expertise.

Why NC Pays Attention to Digital Humans

The world within a game is a virtual space of its own, and the relationship between the game and digital humans is fundamentally inseparable. The virtual space is an area where digital humans can actively engage, and well-implemented digital humans make the worldview of this virtual space all the more appealing.

Until now, however, digital humans in games have largely been limited to providing information to users. Because their reactions to a user’s actions were simple, it was hard for players to empathize with them or to enjoy unexpected reactions.

Playing MMORPGs with others can be more enjoyable than playing alone because players can interact with one another. For the same reason, interaction with digital humans is an essential element of games that cannot be overlooked. If digital humans went through a thought process similar to that of people in conversation before acting, their reactions would vary with the situation. In other words, how much enjoyment an interacting character can provide depends on how deeply it can think. It is therefore important to create digital humans that can interact as deeply as people do.

NCSOFT has built up the capabilities digital humans require, such as AI and cutting-edge graphics technology, along with excellent art resources. The next phase for NCSOFT is to merge these technologies seamlessly to create even more advanced digital humans.

AI Technology for Achieving Ideal Interactions

The digital TJ that NC showcased at GDC is just a preview of the many technologies NC plans to introduce. The ultimate objective of NC's digital human development is to create beings that can not only listen, talk, and move like humans but also think, make decisions based on the situation, and respond accordingly, so that they can genuinely interact with people.

The goal of digital human technology is to create a sense of “presence” and to provide an immersive experience through interaction. Presence refers to visuals convincing enough to give the impression of a real person moving. Interaction refers to technology that lets digital humans make natural facial expressions, movements, subtle gestures, and biological responses just as humans do, deepening immersion so that users feel as if they are conversing with a real person.

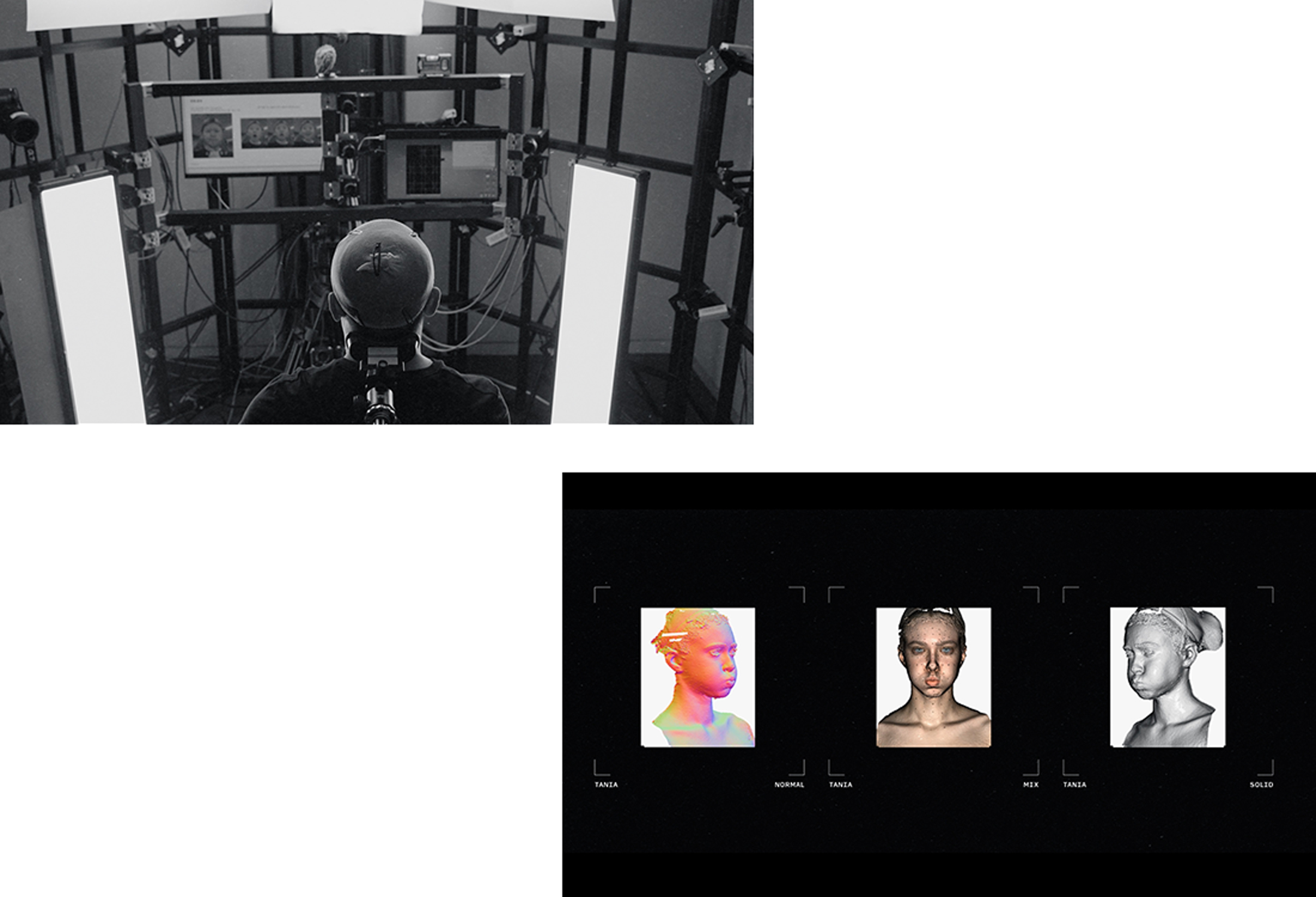

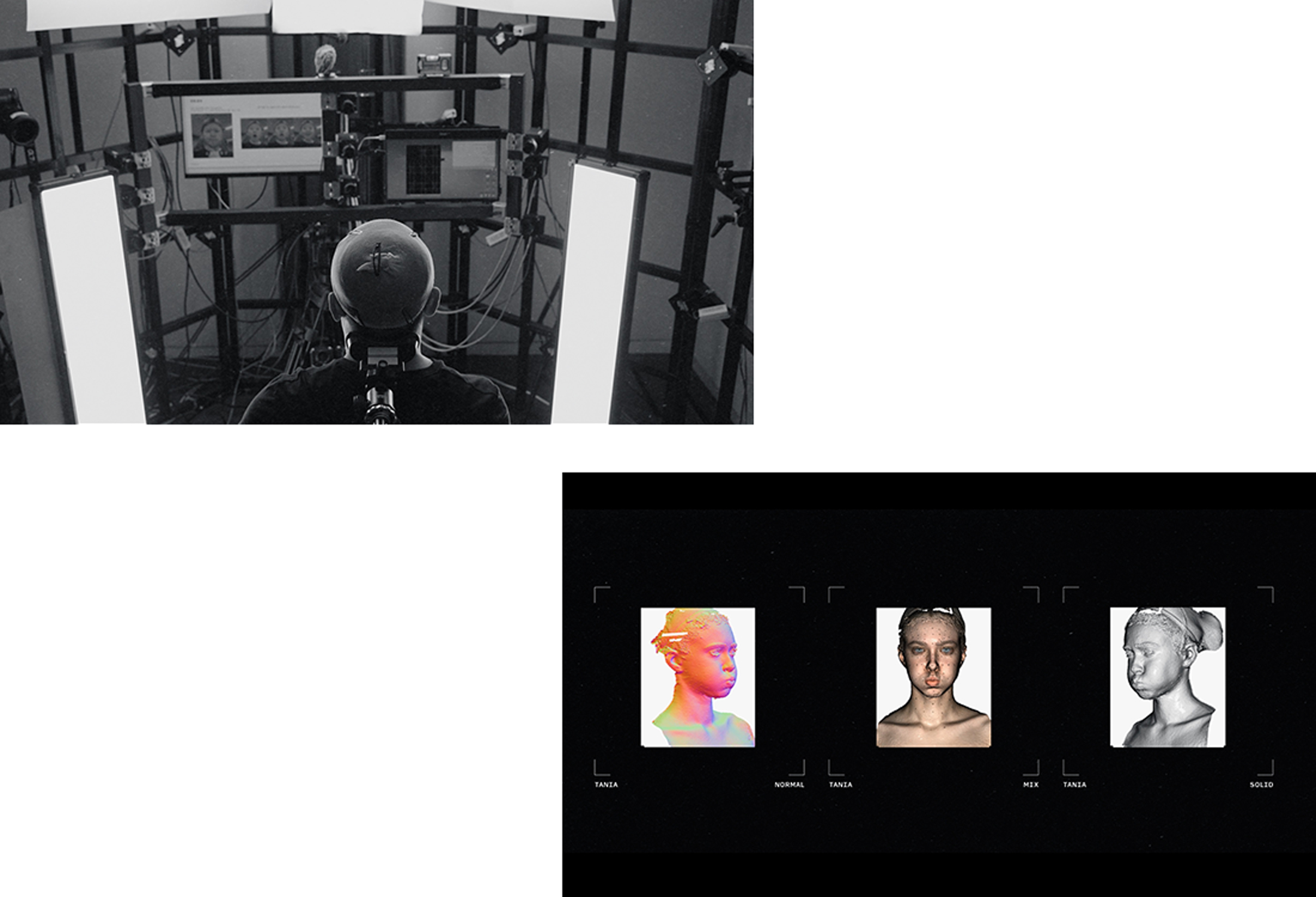

The Core Technology for Realistic “Presence”: 4D Scanning

To achieve these goals, NC relies on exceptional scanning technology, and it is now ready to put the 4D scanner it developed in-house into active use. Whereas conventional 3D scanning captures facial information as still images, the 4D scanner, a next-generation form of 3D scanning, captures it as video, making it possible to record facial expressions and movements as they happen.

4D scanning uses image information just as 3D scanning does, but through continuous shooting it can also capture subtle movements such as facial wrinkles and small muscle motions. During continuous shooting, all cameras must be synchronized to within 1 millisecond (1/1,000 of a second) of each other, and the lighting must be adjusted so the face is evenly lit. This meticulous process yields data for more natural facial expressions and movements.
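To make the 1-millisecond requirement concrete, the sketch below checks whether every camera’s timestamp for a given frame falls within that window. It is a minimal illustration, not NC’s scanner software: the data layout (one list of per-camera timestamps per frame, in integer microseconds) and the function names are hypothetical assumptions. Integer microseconds avoid floating-point rounding at the boundary.

```python
# Hypothetical synchronization check for a multi-camera 4D capture rig.
# Assumption: timestamps are integers in microseconds, one per camera per frame.
SYNC_TOLERANCE_US = 1_000  # 1 millisecond, the tolerance described above


def frame_skew_us(timestamps_us: list[int]) -> int:
    """Largest gap between any two cameras for one frame (max minus min)."""
    return max(timestamps_us) - min(timestamps_us)


def out_of_sync_frames(frames: list[list[int]], tolerance_us: int = SYNC_TOLERANCE_US) -> list[int]:
    """Return indices of frames whose camera timestamps spread wider than the tolerance.

    frames[i][c] is the capture timestamp of frame i from camera c.
    """
    return [i for i, ts in enumerate(frames) if frame_skew_us(ts) > tolerance_us]


if __name__ == "__main__":
    capture = [
        [0, 400, 900],             # spread of 900 us: within tolerance
        [33_333, 33_500, 35_000],  # spread of 1,667 us: out of sync
    ]
    print(out_of_sync_frames(capture))  # [1]
```

Comparing the maximum and minimum timestamps is enough, because the largest pairwise difference between cameras is always that spread.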

Three Key Technologies for Enhancing “Sense of Immersion”

As mentioned earlier, interaction is what increases immersive experiences. To achieve this, three key technologies are crucial: Multimodal Recognition AI for cognitive capabilities, Conversational AI for chatbot technology, and Visual Acting AI for acting skills.

Multimodal Recognition AI detects and analyzes factors such as the speaker's condition and emotions during a conversation. Conversational AI analyzes the speaker's speech to generate appropriate responses. Visual Acting AI uses technologies such as voice synthesis and graphics to express those responses effectively. Together, these technologies let digital humans understand not only what their conversational partner is saying but also the partner's condition, and respond appropriately while expressing nonverbal cues such as facial expressions and subtle gestures throughout.
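The three-stage flow described above (recognize, then converse, then act) can be sketched as a simple pipeline. This is a toy illustration of the architecture only, not NC’s implementation: every class, function, emotion label, and rule below is hypothetical, and each stage is a trivial stand-in for what would in practice be a learned model.

```python
from dataclasses import dataclass


@dataclass
class Perception:
    transcript: str  # what the conversational partner said
    emotion: str     # hypothetical label such as "sad" or "neutral"


@dataclass
class Reply:
    text: str
    tone: str        # how the reply should be delivered


@dataclass
class Performance:
    speech: str      # text to hand to speech synthesis
    expression: str  # facial expression to render
    gesture: str     # accompanying nonverbal gesture


def recognize(transcript: str, detected_emotion: str) -> Perception:
    """Stand-in for Multimodal Recognition AI. A real system would infer the
    emotion from voice, face, and context; here it is passed in directly."""
    return Perception(transcript, detected_emotion)


def converse(p: Perception) -> Reply:
    """Stand-in for Conversational AI: a trivial rule instead of a language model."""
    if p.emotion == "sad":
        return Reply("That sounds tough. Want to talk about it?", "gentle")
    return Reply(f"You said: {p.transcript}", "neutral")


# Stand-in for Visual Acting AI: map delivery tone to nonverbal cues.
NONVERBAL = {"gentle": ("concerned", "slow nod"), "neutral": ("smile", "none")}


def act(r: Reply) -> Performance:
    expression, gesture = NONVERBAL.get(r.tone, ("neutral", "none"))
    return Performance(r.text, expression, gesture)


def interact(transcript: str, detected_emotion: str) -> Performance:
    """Run the stages in order: recognize, converse, act."""
    return act(converse(recognize(transcript, detected_emotion)))
```

For example, `interact("I lost my match", "sad")` yields a gentle spoken reply paired with a concerned expression and a slow nod. Keeping the stages as separate functions mirrors the separation of concerns in the text: each one could be swapped for a real model without changing the others.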

The New Digital Human Production Pipeline

NC's goal is not only to create individual digital humans but also to establish a pipeline for producing advanced digital humans more easily, more quickly, and in large quantities. The ultimate objective is to make digital humans an efficient technology that anyone, not just industry experts, can use as versatile intellectual property in every field. NC also plans to use the technologies and resources produced along the way in various business areas.

To achieve this goal, NC is using a range of AI technologies to automate and streamline complex, time-consuming processes. Specifically, the pipeline for creating digital humans capable of real-time interaction is divided into two parts. The first is a traditional graphics pipeline, primarily used in movies and games, that creates high-quality digital humans suitable for games, movies, dramas, entertainment, and real-world services. The second is an AI-based pipeline focused on cost and time efficiency, which mass-produces digital humans while keeping their quality consistent. NC aims to reach a level where any user can easily own a digital human.

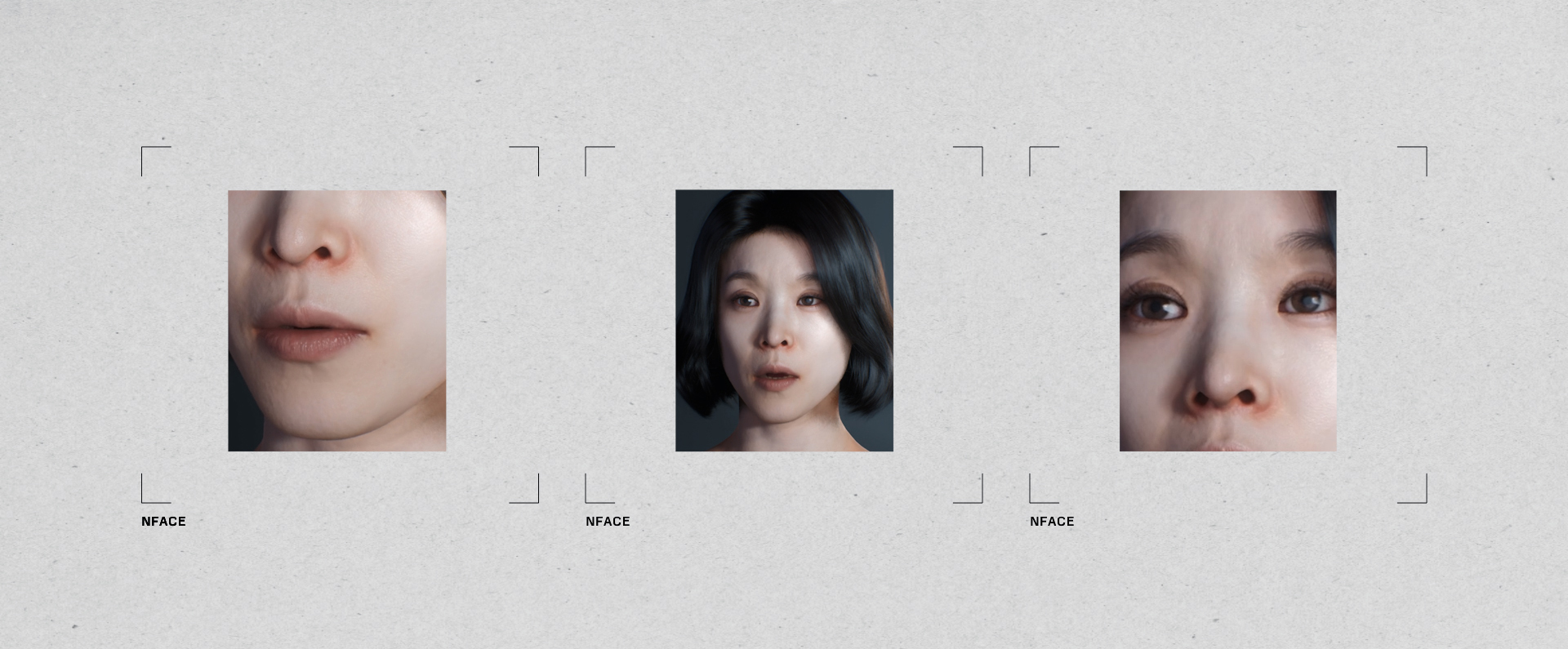

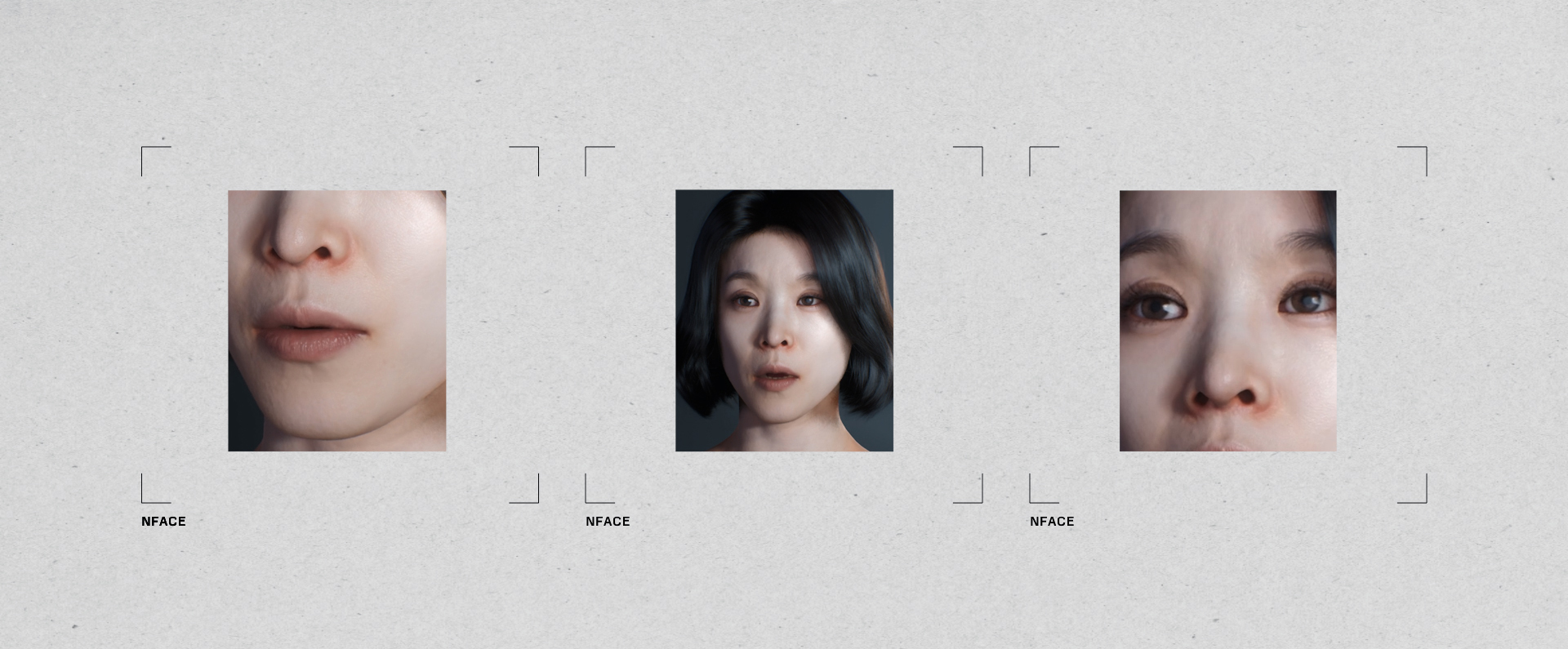

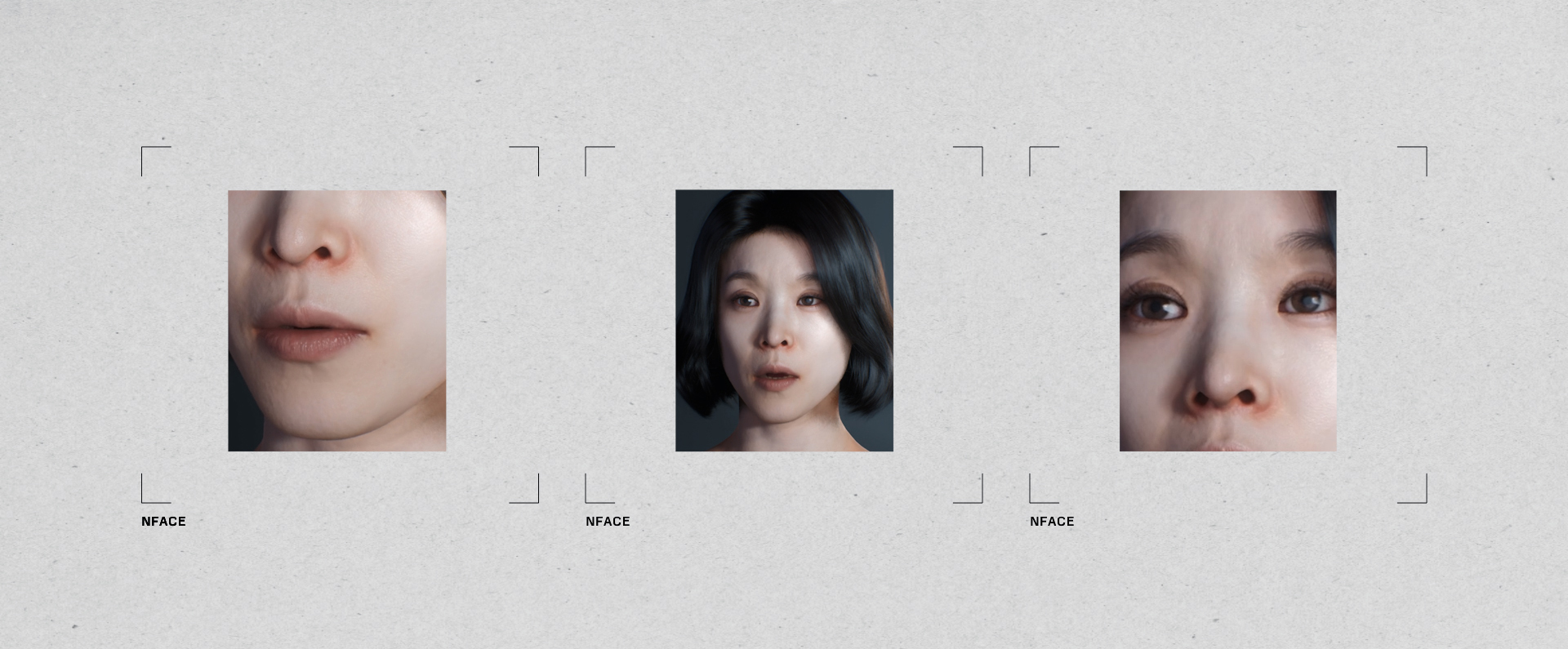

Core Technology of the AI-Based Pipeline, Part 1: NFace

NFace, an AI technology developed by NC, automatically generates appropriate facial expressions and lip movements from dialogue or a voice recording. Given only a voice and a key pose, NFace can generate high-quality lip-sync animation comparable to motion capture at low cost. It can also generate other motions, such as eye gaze, head movements, and blinking, and can even create animations that reflect simple emotional states on the face. NC has built its own system to optimize this technology for Korean lip sync. The technology is expected to be used not only in games but also in fields such as movies and animation.
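To show the kind of input and output involved (a voice in, a per-frame facial animation curve out), here is a deliberately naive baseline that drives a single hypothetical “jaw open” control from audio loudness. This is not NFace’s method, which the text describes as AI-based; it is a simple amplitude-driven approach, sketched under the assumptions of mono samples in the range -1 to 1, a 30 fps animation rate, and a hypothetical 0 to 1 jaw control.

```python
import math


def rms_per_frame(samples: list[float], sample_rate: int, fps: int = 30) -> list[float]:
    """Root-mean-square loudness of the audio covered by each animation frame."""
    hop = sample_rate // fps  # audio samples per animation frame
    envelope = []
    for start in range(0, len(samples), hop):
        chunk = samples[start:start + hop]
        envelope.append(math.sqrt(sum(s * s for s in chunk) / len(chunk)))
    return envelope


def jaw_open_curve(samples: list[float], sample_rate: int, fps: int = 30,
                   smoothing: float = 0.5) -> list[float]:
    """Map loudness to a 0..1 'jaw open' value per frame, with exponential smoothing."""
    envelope = rms_per_frame(samples, sample_rate, fps)
    peak = max(envelope, default=0.0)
    if peak == 0.0:
        return [0.0] * len(envelope)  # silence (or no audio): mouth stays closed
    curve, value = [], 0.0
    for level in envelope:
        # Blend the previous value with the normalized loudness to avoid jitter.
        value = smoothing * value + (1.0 - smoothing) * (level / peak)
        curve.append(value)
    return curve
```

A baseline like this opens and closes the mouth with volume but cannot distinguish sounds such as “m” and “a,” and it produces no gaze, blinks, or emotion. Those are the gaps that a learned system like NFace is described as filling.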

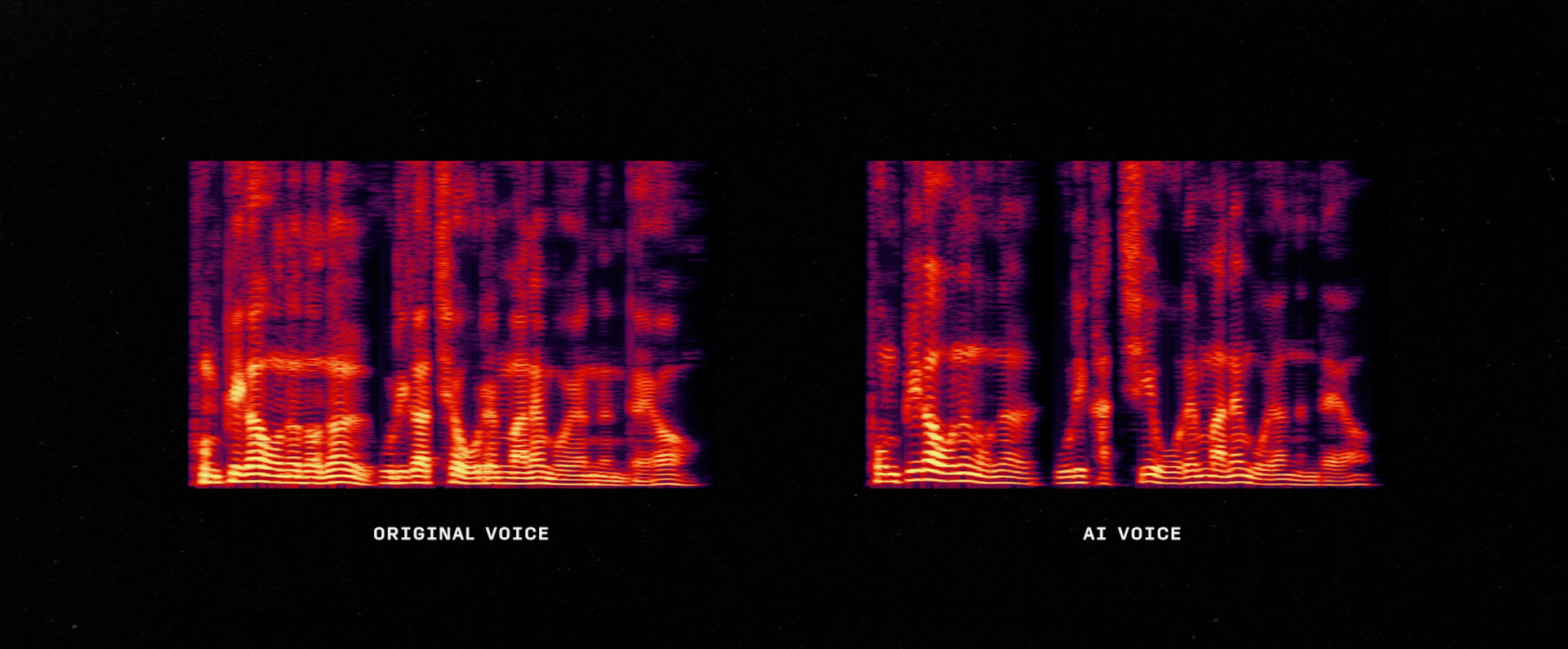

Core Technology of the AI-Based Pipeline, Part 2: TTS

TTS (text-to-speech) is an AI technology that converts input text into speech, using a voice that suits the given situation. Before a TTS model can speak text, it must be trained on paired text and voice data. For instance, training involves learning the relationship between the text “hello” and how it sounds when spoken. During this process, the model also learns the characteristics of the recorded person’s voice, such as tone, accent, pitch, and tempo. Once training is complete, it can read any text in that person’s voice and speaking style. Creating a TTS voice takes only 30 minutes of voice data. The resulting TTS can be used for purposes such as navigation, announcements, audiobooks, and YouTube content. Future research aims to give TTS more natural intonation and to generate clean, noise-free speech.
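The paired-data requirement and the 30-minute figure can be illustrated with a small dataset check run before training. This is a hypothetical sketch, not NC’s tooling: the `Utterance` record, its field names, and the choice to treat 30 minutes as a minimum total duration are all assumptions made for illustration.

```python
from dataclasses import dataclass

# Assumption: treat the 30 minutes mentioned above as a minimum total duration.
MIN_TOTAL_SECONDS = 30 * 60


@dataclass
class Utterance:
    text: str          # what the speaker said, e.g. "hello"
    audio_path: str    # hypothetical location of the matching recording
    duration_s: float  # length of the recording in seconds


def dataset_problems(utterances: list[Utterance]) -> list[str]:
    """Return human-readable problems that would block training; an empty list means OK."""
    problems = []
    for i, u in enumerate(utterances):
        # Every recording needs a transcript: TTS learns from text-voice pairs.
        if not u.text.strip():
            problems.append(f"utterance {i}: empty transcript for {u.audio_path}")
        if u.duration_s <= 0:
            problems.append(f"utterance {i}: non-positive duration for {u.audio_path}")
    total = sum(u.duration_s for u in utterances)
    if total < MIN_TOTAL_SECONDS:
        problems.append(
            f"only {total / 60:.1f} min of audio; {MIN_TOTAL_SECONDS // 60} min expected"
        )
    return problems
```

As a quick arithmetic check, 400 clips averaging 4.5 seconds each add up to 1,800 seconds, which is exactly 30 minutes.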

The Digital Human We Will Meet

NC is actively working toward digital humans that can interact naturally with people, even in ambiguous situations and across different topics. This technology holds significant promise for games, where interaction is key. Instead of NPCs that repeat the same responses, players could encounter game partners, one-of-a-kind rivals, or special heroes they may never meet again. Such digital human technology has the potential to create an entirely new genre of games. NC is committed to dedicating its time and effort to the goal of creating digital humans that people will look forward to meeting.
